The concept of data observability emerged in 2019 in response to the urgent need for comprehensive visibility into the health of data and data systems. Data observability is the continuous monitoring and analysis of data pipelines to detect and diagnose issues promptly, preserving data integrity and reliability. This proactive approach supports better decision-making and operational efficiency. Unlike traditional data monitoring, which focuses primarily on pipeline performance, data observability extends to data quality, enabling organizations to detect and address data issues before they affect critical operations; dedicated platforms such as Acceldata.io address this need. Data observability also complements data reliability engineering by providing continuous insight into data health and enabling proactive maintenance of data systems. As organizations depend more heavily on data for strategic decisions, data observability becomes increasingly important for ensuring data quality and reliability.

Introduction to Data Observability

Data observability aims to deliver full visibility into the health of data and data systems so that organizations can quickly detect and diagnose issues in their data pipelines. Identifying problems early shortens the time to resolution and improves overall data reliability, which helps maintain data integrity and limits the impact of disruptions on business processes. Through continuous monitoring and analysis, data observability lets teams optimize their pipelines and base decisions on accurate, reliable data.

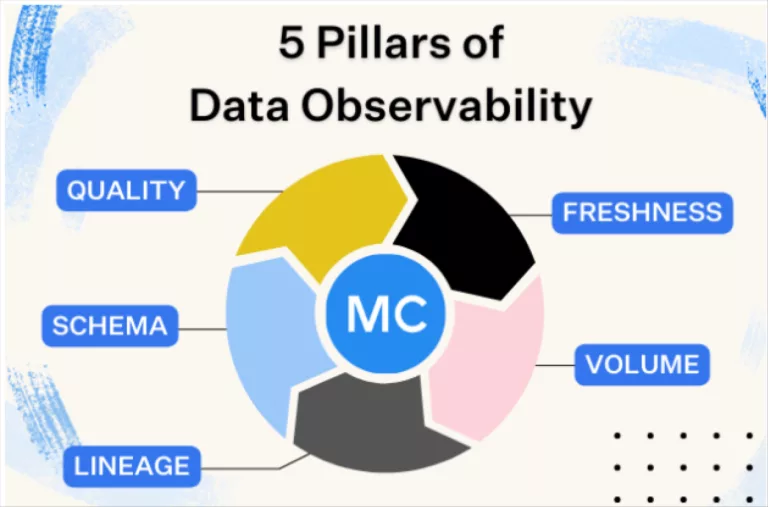

The Five Pillars of Data Observability

Freshness

Freshness measures how up to date data is: when a table was last updated and whether updates arrive on their expected schedule. Fresh data lets organizations make timely, even real-time, decisions instead of relying on outdated information, which matters in any industry that must respond quickly to market changes.
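As a minimal sketch, assuming the pipeline records a timezone-aware "last updated" timestamp for each table, a freshness check compares the table's age against an allowed staleness window. The function name `check_freshness` and the two-hour window in the usage lines are illustrative assumptions, not part of any particular tool.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def check_freshness(last_updated: datetime,
                    max_staleness: timedelta,
                    now: Optional[datetime] = None) -> bool:
    """Return True if the table was updated within the allowed staleness window.

    Hypothetical helper: assumes `last_updated` is timezone-aware (UTC).
    """
    now = now or datetime.now(timezone.utc)
    return now - last_updated <= max_staleness


# Usage: a table last loaded at 09:30 UTC, checked at 12:00 UTC with a 2-hour window.
now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
last_load = datetime(2024, 5, 1, 9, 30, tzinfo=timezone.utc)
print(check_freshness(last_load, timedelta(hours=2), now))  # False: 2.5 hours stale
```

Passing `now` explicitly keeps the check deterministic and easy to test; in production it would default to the current time.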

Quality

The quality pillar evaluates whether the data itself can be trusted, using measures such as completeness (for example, the share of null values), uniqueness, and whether values fall within expected standards or ranges. Data that passes these checks is accurate, reliable, and fit for its intended use in decision-making and operational processes.
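A minimal sketch of column-level quality metrics, assuming records are available as Python dictionaries; a real observability tool would more likely compute the same metrics inside the warehouse. The `quality_report` function and the sample `orders` records are hypothetical.

```python
from typing import Any, Dict, List, Optional


def quality_report(rows: List[Dict[str, Any]], column: str,
                   min_value: Optional[float] = None,
                   max_value: Optional[float] = None) -> Dict[str, float]:
    """Compute completeness, uniqueness, and range metrics for one column.

    Hypothetical helper: rows are plain dicts, and a missing key counts as null.
    """
    values = [row.get(column) for row in rows]
    non_null = [v for v in values if v is not None]
    total = len(values)

    # Completeness: share of rows where the column is null or missing.
    null_rate = (total - len(non_null)) / total if total else 0.0
    # Uniqueness: share of non-null values that repeat an earlier value.
    duplicate_rate = ((len(non_null) - len(set(non_null))) / len(non_null)
                      if non_null else 0.0)
    # Validity: share of non-null values outside the expected range.
    out_of_range = [v for v in non_null
                    if (min_value is not None and v < min_value)
                    or (max_value is not None and v > max_value)]
    out_of_range_rate = len(out_of_range) / len(non_null) if non_null else 0.0

    return {"null_rate": null_rate,
            "duplicate_rate": duplicate_rate,
            "out_of_range_rate": out_of_range_rate}


orders = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": -5.0},
    {"order_id": 3, "amount": 40.0},
]
print(quality_report(orders, "order_id"))              # duplicate_rate is 0.25
print(quality_report(orders, "amount", min_value=0))   # null_rate 0.25, one negative amount
```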

Volume

Volume monitoring tracks changes in dataset size, such as the number of rows loaded per run, to confirm that data arrives in consistent, expected amounts over time. A sudden drop can indicate missing or incomplete data, while a sudden spike can indicate duplicates, so tracking volume helps organizations catch these problems before they affect decisions or operations.
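One simple way to sketch volume monitoring, assuming row counts from previous loads are stored somewhere, is to compare each new load with the median of recent loads. The median is used here because a single unusual day in the history barely moves it; the 50% tolerance and the function names are illustrative assumptions.

```python
from statistics import median
from typing import List


def volume_change(history: List[int], today: int) -> float:
    """Relative change of today's row count versus the median of recent loads."""
    if not history:
        raise ValueError("need at least one historical load to compare against")
    baseline = median(history)
    if baseline == 0:
        # Any rows at all are an unbounded change from an empty baseline.
        return float("inf") if today else 0.0
    return (today - baseline) / baseline


def is_volume_anomaly(history: List[int], today: int, max_change: float = 0.5) -> bool:
    """Flag loads that shrink or grow by more than `max_change` (50% by default)."""
    return abs(volume_change(history, today)) > max_change


recent_loads = [1000, 1040, 980, 1010, 995]       # rows per daily load
print(is_volume_anomaly(recent_loads, 1100))      # False: +10%
print(is_volume_anomaly(recent_loads, 400))       # True: -60%, likely missing data
```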

Schema

Tracking changes to data structures, or schemas (for example, added or removed columns and changed data types), is important because such changes often signal problems in the data ecosystem and can break downstream queries and reports. Monitoring them helps organizations catch inconsistencies or errors early, preserving data integrity and system reliability and supporting informed decision-making.
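A schema check can be sketched by snapshotting each table's column names and types and diffing consecutive snapshots. This assumes the snapshots are available as `{column: type}` dictionaries (for example, read from the warehouse's information schema); `diff_schema` and the sample tables are hypothetical.

```python
from typing import Dict, List


def diff_schema(old: Dict[str, str], new: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare two {column_name: data_type} snapshots of the same table."""
    return {
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
        "type_changed": sorted(c for c in set(old) & set(new) if old[c] != new[c]),
    }


yesterday = {"id": "INTEGER", "email": "VARCHAR", "signup_date": "DATE"}
today = {"id": "INTEGER", "email": "VARCHAR", "signup_date": "VARCHAR", "country": "VARCHAR"}
print(diff_schema(yesterday, today))
# {'added': ['country'], 'removed': [], 'type_changed': ['signup_date']}
```

A type change such as `signup_date` moving from `DATE` to `VARCHAR` is the kind of change that can silently break date filters in downstream reports.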

Lineage

Data lineage records where data comes from and how it moves through systems. Tracing lineage upstream helps pinpoint the source of a problem, while tracing it downstream shows which tables, reports, and dashboards depend on the affected data. Together, these views help organizations follow data flow, locate bottlenecks or issues, and keep data accurate and reliable throughout its journey, which is essential for maintaining data quality and making decisions based on trustworthy information.
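Lineage is naturally modeled as a directed graph. The sketch below assumes lineage is available as an adjacency list over hypothetical table names; a breadth-first search then answers both questions from the paragraph above: which assets are affected downstream, and, on the reversed graph, which upstream sources could be the root cause.

```python
from collections import deque
from typing import Dict, List, Set


def reachable(graph: Dict[str, List[str]], start: str) -> Set[str]:
    """Breadth-first search returning every node reachable from `start`.

    Lineage graphs are normally acyclic; `seen` also guards against cycles.
    """
    seen: Set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def invert(graph: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Reverse every edge so the graph points from each asset to its sources."""
    parents: Dict[str, List[str]] = {}
    for node, children in graph.items():
        for child in children:
            parents.setdefault(child, []).append(node)
    return parents


# Edges point from an upstream asset to the assets built from it.
lineage = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["mart.revenue", "mart.customer_ltv"],
    "mart.revenue": ["dashboard.exec_kpis"],
}
# Impact analysis: what is affected if staging.orders is bad?
print(sorted(reachable(lineage, "staging.orders")))
# Root-cause analysis: what feeds the executive dashboard?
print(sorted(reachable(invert(lineage), "dashboard.exec_kpis")))
```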

Importance of Data Observability

Data observability matters to both data engineers and data consumers. For engineers, it reduces the time and resources spent troubleshooting data issues; for consumers, it increases trust in the data behind their decisions by keeping that data accurate and reliable.

Key Features of Data Observability Tools

Effective data observability tools should:

- Seamlessly integrate with existing data stacks without requiring extensive modifications.

- Monitor data where it is already stored, without extracting or copying it, which supports scalability and security.

- Use machine learning for automated anomaly detection while keeping false positives to a minimum.

- Provide rich contextual information for quick incident resolution and impact analysis.

Data Observability vs. Data Testing

Data testing is an essential step in ensuring data quality: it checks for known issues that can be anticipated and written down in advance as explicit rules. Data observability goes further by detecting issues that nobody anticipated (“unknown unknowns”). It continuously monitors data pipelines for irregular patterns, unexpected changes, or emerging issues that could affect data integrity or reliability. By applying machine learning and anomaly detection, data observability surfaces hidden problems that predefined tests miss, which improves both data quality and system resilience and shortens response times when new data problems appear.
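The difference can be illustrated with a simplified sketch. A data test encodes one anticipated rule, while an observability monitor learns a baseline for every tracked metric from history and flags deviations without a rule written for that specific failure. Here a mean plus or minus k standard deviations threshold stands in for the machine-learning models a real platform might use; the metric names and helper functions are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List


def check_no_null_ids(null_id_count: int) -> bool:
    """A data test: an explicit rule for a failure mode someone anticipated."""
    return null_id_count == 0


def learned_monitor(history: List[Dict[str, float]],
                    current: Dict[str, float],
                    k: float = 3.0) -> List[str]:
    """Flag every metric whose current value is more than k standard deviations
    from its historical mean. No per-metric rule is written, so shifts nobody
    anticipated can still surface."""
    flagged = []
    for metric, value in current.items():
        past = [run[metric] for run in history if metric in run]
        if len(past) < 2:
            continue  # not enough history to learn a baseline yet
        mu, sigma = mean(past), stdev(past)
        if (sigma == 0 and value != mu) or (sigma > 0 and abs(value - mu) > k * sigma):
            flagged.append(metric)
    return sorted(flagged)


history = [
    {"row_count": 1000, "distinct_customers": 300},
    {"row_count": 1010, "distinct_customers": 305},
    {"row_count": 990, "distinct_customers": 298},
    {"row_count": 1005, "distinct_customers": 302},
]
today = {"row_count": 1000, "distinct_customers": 40}

print(check_no_null_ids(0))              # True: the predefined test passes
print(learned_monitor(history, today))   # ['distinct_customers']
```

The predefined test passes because no one wrote a rule about customer counts, yet the learned baseline still flags the collapse in distinct customers.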

Data Observability vs. Data Monitoring

Data monitoring tracks the operational behavior of data pipelines, such as performance metrics and throughput. Data observability goes beyond pipeline tracking by monitoring data quality alongside pipeline performance. This broader approach verifies not only that pipelines run as expected but also that the data flowing through them is accurate, complete, and consistent. A job can finish successfully and on time while still writing incorrect data, so combining pipeline and data-quality monitoring lets organizations catch such issues proactively, supporting better-informed decisions and reliable operations.
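To make the distinction concrete, consider a hypothetical pipeline run that finishes on time but writes a key column that is 40% null. The sketch assumes each run reports a status, a duration, a row count, and a null rate; the `PipelineRun` record and the thresholds are illustrative. Pipeline-level monitoring reports no problem, while adding data-level checks catches the issue.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PipelineRun:
    status: str          # e.g. "success" or "failed"
    duration_s: float    # wall-clock runtime in seconds
    rows_written: int
    null_rate: float     # share of nulls in a key column (hypothetical metric)


def monitoring_issues(run: PipelineRun, max_duration_s: float) -> List[str]:
    """Pipeline-level checks only: did the job run, and how fast?"""
    issues = []
    if run.status != "success":
        issues.append("job failed")
    if run.duration_s > max_duration_s:
        issues.append("job too slow")
    return issues


def observability_issues(run: PipelineRun, max_duration_s: float,
                         max_null_rate: float) -> List[str]:
    """Pipeline checks plus checks on the data the job actually produced."""
    issues = monitoring_issues(run, max_duration_s)
    if run.rows_written == 0:
        issues.append("no rows written")
    if run.null_rate > max_null_rate:
        issues.append("null rate too high")
    return issues


run = PipelineRun(status="success", duration_s=120.0, rows_written=50_000, null_rate=0.4)
print(monitoring_issues(run, max_duration_s=600))                         # []
print(observability_issues(run, max_duration_s=600, max_null_rate=0.01))  # ['null rate too high']
```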

Data Observability vs. Data Quality

Data observability complements data quality efforts by continuously monitoring data and detecting issues proactively. By watching data pipelines and processes, it identifies potential problems early and allows timely intervention before they compromise data integrity. This helps ensure that the data used for decisions and operations remains accurate, consistent, and trustworthy, improving the overall effectiveness and reliability of data-driven operations.

Signs You Need a Data Observability Platform

Consider adopting a data observability platform if:

- Your data platform has migrated to the cloud.

- Your data stack is expanding in complexity.

- Your team spends significant time resolving data quality issues.

- Data is integral to your company’s value proposition.

The Future of Data Observability

Data observability is a rapidly evolving field that is becoming essential to modern data management. As organizations rely more heavily on data for operations and decision-making, its role in ensuring data quality and reliability keeps growing. The discipline provides comprehensive visibility into data pipelines, enabling proactive monitoring and intervention to address potential issues and optimize data-driven processes.

Conclusion

Data observability plays a crucial role in helping organizations manage and optimize their data ecosystems. By adopting data observability practices, businesses can proactively monitor the health and performance of their data pipelines and systems. This builds confidence in data-driven decisions by keeping the data accurate, reliable, and up to date, and it surfaces potential issues early, reducing the risk that data errors or inconsistencies disrupt operations. Ultimately, embracing data observability leads to more efficient and reliable data operations, supporting business success and competitiveness in an increasingly data-driven world.